In 1956, Arthur C. Clarke wrote "The Forgotten Enemy," a science fiction story that dealt with the return of the ice age. Surely it was not Clarke's best story, but it may have been the first written on that subject by a well-known author. Several other sci-fi authors examined the same theme, but that does not mean that, at that time, there was a scientific consensus on global cooling. It just means that a consensus on global warming was reached only later, in the 1980s. But which mechanisms were used to build this consensus? And why does it seem impossible, nowadays, to reach consensus on anything? This post discusses the subject, using climate science as an example.

You may remember how, in 2017, during the Trump presidency, the idea of staging a debate on climate change in the form of a "red team vs. blue team" encounter between orthodox climate scientists and their opponents floated briefly in the media. Climate scientists were horrified at the idea. They were especially appalled by the military connotations of the "red vs. blue" label, which hinted at how the debate might have been organized. On the government side, it was quickly realized that their side had no chance in a fair scientific debate. So the debate never took place, and it is good that it didn't. Maybe those who proposed it were well-intentioned (or maybe not), but in any case it would have degenerated into a fight and just created confusion.

Yet, the story of that debate that was never held hints at a point that most people understand: the need for consensus. Nothing in our world can be done without some form of consensus and the question of climate change is a good example. Climate scientists tend to claim that such a consensus exists, and they sometimes quantify it as 97% or even 100%. Their opponents claim the opposite.

In a sense, they are both right. A consensus on climate change exists among scientists, but not among the general public. Polls say that a majority of people know something about climate change and agree that something must be done about it, but that is not the same as an in-depth, informed consensus. Besides, this majority rapidly disappears as soon as it is time to do something that touches someone's wallet. The result is that, for more than 30 years, thousands of the best scientists in the world have been warning humankind of an approaching dire threat, and nothing serious has been done. Only proclamations, greenwashing, and "solutions" that worsen the problem (the "hydrogen-based economy" is a good example).

So, consensus building is a fundamental matter. You can call it a science or see it as another name for what others call "propaganda." Some reject the very idea as a form of "mind control"; others practice it through various methods of rule-based negotiation. It is a fascinating subject that goes to the heart of our existence as human beings in a complex society.

Here, instead of tackling the issue from a general viewpoint, I'll discuss a specific example: "global cooling" vs. "global warming," and how a consensus was reached that warming is the real threat. This dispute is often cited as proof that no such thing as consensus exists in climate science.

You have surely heard the story of how, just a few decades ago, "global cooling" was the generally accepted scientific view of the future, and how those silly scientists then changed their minds and switched to warming. Conversely, you may also have heard that this is a myth and that there never was a consensus that Earth was cooling.

As is always the case, reality is more complex than politics wants it to be. Global cooling as an early scientific consensus is one of the many legends generated by the discussion about climate change and, like most legends, it is basically false. But it has at least some links with reality. It is an interesting story that tells us a lot about how consensus is reached in science. But we need to start from the beginning.

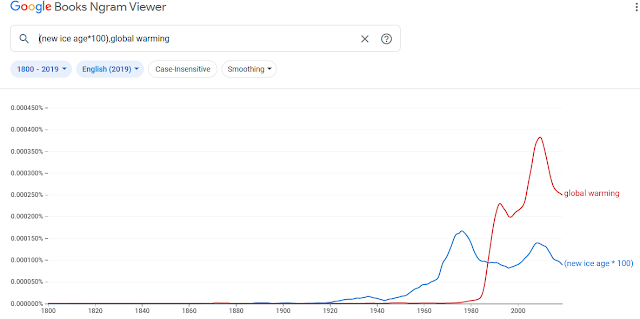

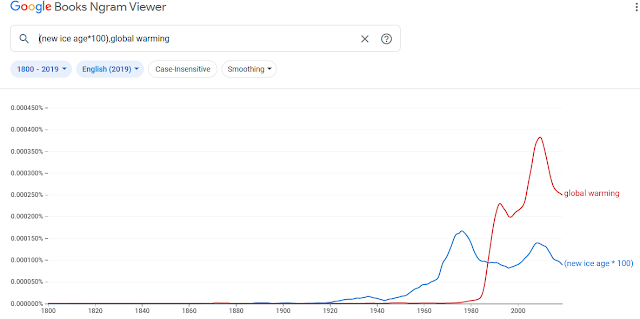

The idea that Earth's climate was not stable emerged in the mid-19th century with the discovery of past ice ages. At that point, an obvious question was whether ice ages could return in the future. The matter remained at the level of scattered speculation until the mid-20th century, when the concept of a "new ice age" appeared in the "memesphere" (the ensemble of human public memes). We can follow this evolution using Google "Ngrams," a database that measures the frequency of strings of words in a large corpus of published books (Thanks, Google!!).

You see that the possibility of a "new ice age" entered the public consciousness as early as the 1920s, then grew and reached a peak in the early 1970s. Other strings, such as "Earth cooling," give similar results. Note also that the "English Fiction" corpus shows a large peak for the concept of a "new ice age" at about the same time, in the 1970s. Later on, cooling was completely replaced by the concept of global warming. You can see in the figure below how the crossover arrived in the late 1980s.

Even after it started to decline, the idea of a "new ice age" remained popular, and journalists loved presenting it to the public as an imminent threat. For instance, Newsweek printed an article titled "The Cooling World" in 1975. The concept also provided good material for the catastrophe genre in fiction: as late as 2004, it was the basis of the movie "The Day After Tomorrow."

Does that mean that scientists, as a community, ever believed that the Earth was cooling? No. There was no consensus on the matter. The state of climate science until the late 1970s simply didn't allow certainties about Earth's future climate.

As an example, in 1972, the well-known report to the Club of Rome, "The Limits to Growth," noted the growing concentration of CO2 in the atmosphere, but it did not state that it would cause warming: evidently, the issue was not yet clear even to scientists engaged in global ecosystem studies. Eight years later, in 1980, the authors of "The Global 2000 Report to the President of the U.S.," commissioned by President Carter, already had a much better understanding of the climate effects of greenhouse gases. Nevertheless, they did not rule out global cooling, and they discussed it as a plausible scenario.

The Global 2000 Report is especially interesting because it provides some data on the opinion of climate scientists as it was in 1975. Twenty-eight experts were interviewed and asked to forecast the average world temperature for the year 2000. The result was no warming, or a minimal one of about 0.1 °C. In the real world, though, temperatures had risen by more than 0.4 °C by 2000. Clearly, in 1980, there was no such thing as a scientific consensus on global warming. On this point, see also the paper by Peterson et al. (2008), which analyzes the scientific literature of the 1970s. A majority of papers was found to favor global warming, but a significant minority argued for no temperature change or for global cooling.

Now we are getting to the truly interesting point of this discussion. The consensus that Earth was warming did not exist before the 1980s, but then it became the norm. How was it obtained?

There are two interpretations floating in the memesphere today. One is that scientists agreed on a global conspiracy to terrorize the public about global warming in order to obtain personal advantages. The other is that scientists are cold-blooded data analyzers who did as the saying attributed to John Maynard Keynes goes: "When the facts change, I change my mind."

Both are legends. The one about the scientific conspiracy is obviously ridiculous, but the second is just as silly. Scientists are human beings, and data are not gospel truth. Data are always incomplete, affected by uncertainties, and need to be selected. Try to develop Newton's law of universal gravitation without ignoring all the data about falling feathers, paper sheets, and birds, and you'll see what I mean.

In practice, science is a fine-tuned consensus-building machine. It has evolved exactly for the purpose of smoothly absorbing new data in a gradual process that does not lead (normally) to the kind of partisan division that's typical of politics.

Science uses a procedure derived from an ancient method that, in medieval times, was called disputatio and that has its roots in the art of rhetoric of classical times. The idea is to debate issues by having champions of the different theses square off against each other, trying to convince an informed audience with the best arguments they can muster. The medieval disputatio could be very sophisticated; as an example, I have discussed the "Controversy of Valladolid" (1550-51) on the status of the American Indians. Theological disputationes normally failed to reconcile truly incompatible positions, for instance, to convince Jews to become Christians (it was tried more than once, but you may imagine the results). But sometimes they did lead to good compromises, and they kept the confrontation at the verbal level (at least for a while).

In modern science, the rules have changed a little, but the idea remains the same: experts try to convince their opponents using the best arguments they can muster. It is supposed to be a discussion, not a fight. Good manners are to be maintained, and the fundamental requirement is being able to speak a mutually understandable language. And not just that: the discussants need to agree on some basic tenets that frame the discussion. During the Middle Ages, theologians debated in Latin and agreed that the discussion was to be based on the Christian scriptures. Today, scientists debate in English and agree that the discussion is to be based on the scientific method.

In the early days of science, one-to-one debates were used (maybe you remember the famous 1860 debate about Darwin's ideas between Thomas Huxley and Bishop Samuel Wilberforce). But nowadays, that is rare. The debate takes place at scientific conferences and seminars where several scientists participate, gaining or losing "prestige points" depending on how good they are at presenting their views. Occasionally, a presenter, especially a young scientist, may be "grilled" by the audience in a small re-enactment of the coming-of-age ceremonies of Native Americans. But, most important of all, informal discussions take place all over the conference. These meetings are not supposed to be vacations; they serve the face-to-face exchange of ideas. As I said, scientists are human beings, and they need to see each other face to face to understand each other. A lot of science is done in cafeterias and over a glass of beer. Possibly, most scientific discoveries start in this kind of informal setting. No one, as far as I know, was ever struck by a ray of light from heaven while watching a PowerPoint presentation.

It would be hard to maintain that scientists are more adept at changing their views than medieval theologians were, and older scientists do tend to stick to old ideas. You sometimes hear that science advances one funeral at a time; that is not wrong, but it is surely an exaggeration: scientific views do change without having to wait for the old guard to die. The debate at a conference can decisively tilt toward one side on the basis of a scientist's brilliance, the availability of good data, and the overall competence demonstrated.

I can testify that, at least once, I saw someone in the audience rise after a presentation and say, "Sir, I was of a different opinion until I heard your talk, but now you convinced me. I was wrong and you are right." (This person was more than 70 years old: good scientists may age gracefully, like wine.) In many cases, the conversion is neither so sudden nor so spectacular, but it does happen. Then, of course, money can work miracles in shaping scientific views but, as far as climate science is concerned, not much money is involved, and corruption among scientists is not as widespread as it is in other fields, such as medical research.

So, we can imagine that in the 1980s the consensus machine worked as it was supposed to, leading the general opinion of climate scientists to switch from cooling to warming. That was a good thing, but the story didn't end there. People outside the narrow field of climate science still had to be convinced, and that was not easy.

From the 1990s onward, the disputatio was dedicated to convincing non-climate scientists, that is, both scientists working in other fields and intelligent laypersons. There was a serious problem with that: climate science is not a matter for amateurs, and it is a field where the Dunning-Kruger effect (people overestimating their competence) may be rampant. Climate scientists found themselves dealing with various kinds of opponents: typically, elderly scientists who refused to accept new ideas or, sometimes, geologists who resented climate science as an invasion of their turf. Occasionally, opponents could score points in the debate by focusing on narrow points that they themselves had not completely understood (for instance, the "tropospheric hot spot" was a fashionable trick). But when the debate involved someone who knew climate science well enough, the opponents were easily steamrolled.

These debates went on for at least a decade. You may know the 2009 book by Randy Olson, "Don't be Such a Scientist" that describes this period. Olson surely understood the basic point of debating: you must respect your opponent if you aim at convincing him or her, and the audience, too. It seemed to be working, slowly. Progress was being made and the climate problem was becoming more and more known.

And then, something went wrong. Badly wrong. Scientists suddenly found themselves cast into another kind of debate for which they had no training and little understanding. You can see in Google Ngrams how the idea that climate change was a hoax took off in the 2000s and became a feature of the memesphere. Note how rapidly it rose: it spiked in 2009, with the Climategate scandal, but it didn't decline afterward.

It was a completely new way to discuss: no longer a disputatio. No more rules, no more mutual respect, no more common language. Only slogans and insults. A climate scientist described this kind of debate as being involved in a "bare-knuckle bar fight." From then on, the climate issue became politicized and sharply polarized. No progress was made, and none is being made right now.

Why did this happen? In large part, it was because of a professional PR campaign aimed at disparaging climate scientists. We don't know who designed it and paid for it but, surely, there were (and still are) industrial lobbies that stood to lose a lot if decisive action to stop climate change were implemented. Those who conceived the campaign had an easy time against a group of people who were as naive about communication as they were expert in climate science.

The Climategate story is a good example of the mistakes scientists made. If you read the whole corpus of the thousands of emails released in 2009, nowhere will you find that the scientists falsified data, engaged in conspiracies, or sought personal gain. But they managed to give the impression of being a sectarian clique that refused to accept criticism from their opponents. In scientific terms, they did nothing wrong, but in terms of image, it was a disaster. Another mistake was trying to steamroll their adversaries by claiming a 97% scientific consensus on human-caused climate change. Even assuming that the figure is true (it may well be), it backfired, giving once more the impression that climate scientists are self-referential and do not take into account other people's objections.

Let me give you another example of a scientific debate that derailed and became a political one. I already mentioned the 1972 study "The Limits to Growth." It was a scientific study, but the debate that ensued was outside the rules of scientific debate. A feeding frenzy among sharks would be a better description of how the world's economists got together to tear the LTG study to pieces. The "debate" rapidly spilled over into the mainstream press, and the result was a general demonization of the study, which was accused of having made "wrong predictions" and, in some cases, of planning the extermination of humankind. (I discuss this story in my 2011 book "The Limits to Growth Revisited.") The interesting (and depressing) thing you can learn from this old debate is that no progress was made in half a century. As the 50th anniversary of the publication approaches, you can find the same criticisms republished afresh on websites: "wrong predictions" and all the rest.

So, we are stuck. Is there hope of reversing the situation? Hardly. The loss of the ability to reach consensus seems to be a feature of our times: debates require a minimum of mutual respect to be effective, but that has been lost in the cacophony of the Web. The only form of debate that remains is the vestigial one in which presidential candidates stiffly exchange platitudes every four years. But a real debate? No way; it is gone, like the disputations among theologians in the Middle Ages.

The discussion on climate, as on all important issues, has moved to the Web, in large part to social media. And the effect on consensus-building has been devastating. It is one thing to face a human being across a table with two glasses of beer on it; it is quite another to see a chunk of text fall out of the blue as a comment on your post. That is a recipe for a quarrel, and it works every time.

Also, it doesn't help that international scientific meetings and conferences have all but disappeared in a situation that discourages in-person meetings. Online meetings have turned out to be hours of boredom in which nobody listens to anybody and everyone is happy when they are over. Even if you can still manage to attend an in-person meeting, it doesn't help that your colleague appears to you as a masked bag of dangerous viruses, to be kept at a distance at all times, if possible behind a plexiglass barrier. Not the best way to establish a human relationship.

This is a fundamental problem: if you can't build consensus through debate, the only other possibility is the political method. It means obtaining a majority by means of a vote (and note that in science, as in theology, voting is not considered an acceptable consensus-building technique). After the vote, the winning side can force its position on the minority using a combination of propaganda, intimidation and, sometimes, physical force. An extreme consensus-building technique is the extermination of opponents. It has been done so often in history that it is hard to think it will not be done again on a large scale in the future, and perhaps not a remote one. But, apart from the moral implications, forced consensus is expensive and inefficient, and it often leads to the establishment of dogmas. Then it becomes impossible to adapt to new data when they arrive.

So, where are we going? Things keep changing all the time; maybe we'll find new ways to reach consensus even online, which implies, at a minimum, not insulting and attacking your opponent right from the beginning. As for a common language, having switched from Latin to English, we might now switch to "Googlish," a new world language that might be structured to avoid clashes of absolutes: perhaps it would just be devoid of expletives, perhaps it would have specific features that help build consensus. For sure, we need a reform of science that gets rid of the corruption rampant in many fields: money is a kind of consensus, but not the one we want.

Or maybe we might develop new rituals. Rituals have always been a powerful way to attain consensus; just think of the Christian mass (the Christian church has not yet realized that it has been dealt a deadly blow by the anti-virus rules). Could rituals be transferred online? Or would we need to meet in person in the forest, like the "book people" imagined by Ray Bradbury in his 1953 novel "Fahrenheit 451"?

We cannot say. We can only ride the wave of change that, nowadays, seems to have become a true tsunami. Will we float or sink? Who can say? The shore seems to be still far away.

h/t Carlo Cuppini and "moresoma"