I can picture the three Hungarians, with their strong European accents, in Washington, trying to convince the Uranium Committee: a general, an admiral, and some mature scientists. "We canna make a little bomba and it will blow up a whola city." How could they believe it? But by August 1945, six years later, nuclear power had changed the world, quickly ended World War Two, and started an industry the size of the automotive one.

After a few years, Otto Hahn became a fervent opponent of the use of atomic energy for military purposes. Even before Hiroshima, Szilard, one of the most brilliant minds of the time, was ousted from anything to do with nuclear power. He devoted himself full-time to biology and in 1962 founded the Council for a Livable World, an organization dedicated to the elimination of nuclear arsenals. In a 1947 issue of The Atlantic, Einstein claimed that only the United Nations should have atomic weapons at its disposal, as a deterrent to new wars.

The essential fusion

Today, most people probably have some idea of what nuclear fusion is. Even the Italian prime minister, Mr. Mario Draghi, spoke about it at a recent session of parliament. Energy can be produced by splitting uranium nuclei in two, but it can also be produced by fusing light atomic nuclei. We have all been taught that this is the way the sun works, and it has been repeated ad nauseam by people with a superficial knowledge of these processes, such as the Italian minister for the ecological transition, Roberto Cingolani. But not everyone knows that if helium could be readily generated from two hydrogen atoms, our star, made of hydrogen, would have exploded billions of years ago in a giant cosmic bang. Fortunately, the fusion of hydrogen involves a "weak" reaction and is so slow and so unlikely that, even under the extraordinary conditions of the sun's core, the power density produced by the reaction is about the same as that of a stack of decomposing manure, the kind we see steaming in the fields in winter. To radiate the low-level energy produced in its giant core, the sun, about 1.4 million kilometers in diameter, must shine at twice the temperature of a lightbulb filament when it is on.
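The two comparisons above can be checked with a back-of-the-envelope calculation. The sketch below is only illustrative: the solar luminosity and radius are standard reference values, not figures from this article, and the filament temperature of 2800 K is an assumed, typical value for an incandescent bulb.

```python
import math

# Nominal solar values; standard reference figures, not numbers from the article.
L_SUN = 3.828e26         # solar luminosity, W
R_SUN = 6.957e8          # solar radius, m
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

# Assumed, illustrative temperature of an incandescent tungsten filament, K.
FILAMENT_T = 2800.0


def mean_power_density(luminosity: float, radius: float) -> float:
    """Power output averaged over the whole volume of the star, in W/m^3."""
    volume = 4.0 / 3.0 * math.pi * radius**3
    return luminosity / volume


def effective_temperature(luminosity: float, radius: float) -> float:
    """Black-body surface temperature needed to radiate the luminosity, in K."""
    area = 4.0 * math.pi * radius**2
    return (luminosity / (area * SIGMA)) ** 0.25


if __name__ == "__main__":
    print(f"mean power density: {mean_power_density(L_SUN, R_SUN):.2f} W/m^3")
    t_eff = effective_temperature(L_SUN, R_SUN)
    print(f"effective temperature: {t_eff:.0f} K")
    print(f"ratio to assumed filament: {t_eff / FILAMENT_T:.1f}")
```

Averaged over the whole volume, the sun produces only a fraction of a watt per cubic meter; the value in the core is higher, since the reactions are concentrated there, but it remains modest. The surface temperature required to radiate the total comes out a little under 5800 K, roughly twice the assumed filament temperature.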

To do something useful on Earth by means of nuclear fusion, one can't use ordinary hydrogen but needs two of its rare isotopes, deuterium and tritium, which are, not by chance, the ingredients of H-bombs. The promoters of fusion for peaceful purposes don't mention bombs, but this is precisely what I want to talk about: the analogies between fusion now and what happened in the 1930s and 1940s.

Peaceful use?

Reading what was written by the scientists who worked in nuclear fusion in the early years of the "atomic age" shows that the development of an energy source for peaceful use, energy "too cheap to meter", is what motivated them more than anything else. The same arguments were brought forward by Claudio Descalzi, CEO of ENI, a major investor in fusion, addressing the Italian Parliamentary Committee for the Security of the Republic (COPASIR) in a hearing of December 9th 2021: fusion will offer humanity large quantities of energy of a safe, clean and virtually inexhaustible kind.

Wishful thinking. With regard to "inexhaustible," we cannot do anything in fusion without tritium (an isotope of hydrogen), which is virtually nonexistent on this planet, and most theoretical predictions (there are no experiments to date) say that magnetic confinement, the main hope of fusion, will not breed enough tritium to sustain itself. Speaking of "clean" energy, Paola Batistoni, head of ENEA's Fusion Energy Development Division, envisages at reactor shutdown the production of hundreds of thousands of tons of materials that humans will be unable to approach for hundreds of years.

However, the problem I am worried about here is a military one, mostly ignored, even by COPASIR. There are many reasons to worry about nuclear fusion: the huge amount of magnetic energy in the reactor can cause explosions equivalent to hundreds of kilograms of TNT, resulting in the release of tritium, a highly radioactive gas that is difficult to contain. On top of that, the neutrons of nuclear fusion make it possible to breed fissile materials. But the risks that seem most worrisome to me in the long run will come from new weapons, never seen before.

New weapons

To better understand this issue, let's review how classical thermonuclear weapons, the 70-year-old ones, work. Their exact characteristics are not in the public domain, but Wikipedia describes them in sufficient detail. For a more complete introduction, I recommend the highly readable books by Richard Rhodes. There exist today "simple" fission bombs, which use only fission reactions to generate energy, and "thermonuclear" bombs, which use both fission and fusion for that purpose. Thermonuclear bombs are an example of inertial confinement fusion (ICF), where everything happens so quickly that all the energy is released before the reacting matter has time to disperse.

The New York Times recently announced advances in the field of inertial fusion at the Lawrence Livermore National Lab in California, with an article reporting important findings from NIF, the National Ignition Facility. What really happened was that the 192 most powerful lasers in the world, simultaneously illuminating the inner walls of a gold capsule a few centimeters in size, vaporized it and heated it to millions of degrees. The X-rays emitted by this gold plasma in turn heated the surface of a 3 mm sphere of fusion fuel which, imploding, reached ignition. Ignition means that the fusion reactions are self-sustaining until the fuel is used up. As described in the article, a thermonuclear explosion equivalent to a few kilograms of TNT occurs without an atom bomb trigger, as in the conceptually analogous, but vastly more powerful, H-bomb of Teller and Ulam from the fifties.

We don't have to worry too much about these recent results for now: NIF still needs three football fields of equipment to work, nothing one could place at the tip of a rocket or drop from the belly of an airplane. But its miniaturization is the next step.

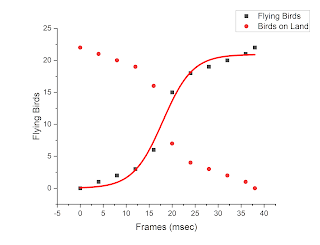

In fusion, military and civilian, particles must collide with an energy of the order of 10 keV, ten thousand electron-volts: the 100 million degrees mentioned everywhere when speaking of fusion. Regarding the necessary fuel ingredients, deuterium is abundant, stable, and easily available. Tritium, on the other hand, with a half-life of about 12 years, can't be found in nature, and only a few fission reactors can produce it, in small quantities. The world reserves are around 50 kg, barely enough for scientific experiments, and it is thousands of times more expensive than gold. Fusion bombs solved the tritium procurement issue by transmuting lithium-6, the fusion fuel of Fig. 1, instantaneously, by means of fission neutrons. In civilian fusion, instead, the possibility of extracting enough tritium from lithium is far from obvious. It is one of the important issues expected to be tested by ITER, a gigantic TOKAMAK, the most promising incarnation of magnetic fusion, under construction in the south of France with money from all over the world but mainly from the European Union. The Russians, who invented it, and the Americans, the ones with most of the experience in the field, are skeptical partners contributing less money than Italy. The NIF inertial fusion experiment, instead, is financed with billions of dollars by the US nuclear weapons program, the most expensive fusion investment to reach ignition.
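The link between 10 keV and 100 million degrees, and the effect of radioactive decay on a tritium stock, can be sketched in a few lines. This is an illustration under stated assumptions: the Boltzmann constant is the standard value, the tritium half-life of 12.32 years is a commonly quoted reference figure rather than a number from this article, and the 50 kg stock is the world reserve mentioned above.

```python
# Boltzmann constant in eV per kelvin (standard value).
K_B_EV_PER_K = 8.617333262e-5

# Assumed reference value for the tritium half-life, in years.
TRITIUM_HALF_LIFE_Y = 12.32


def kev_to_kelvin(energy_kev: float) -> float:
    """Temperature T such that k_B * T equals the given energy."""
    return energy_kev * 1.0e3 / K_B_EV_PER_K


def remaining_mass(initial_kg: float, years: float,
                   half_life_y: float = TRITIUM_HALF_LIFE_Y) -> float:
    """Mass left after exponential decay, with no new production."""
    return initial_kg * 0.5 ** (years / half_life_y)


if __name__ == "__main__":
    print(f"10 keV corresponds to about {kev_to_kelvin(10.0):.2e} K")
    for t in (5, 10, 20):
        print(f"50 kg of tritium after {t} years: {remaining_mass(50.0, t):.1f} kg")
```

The conversion gives a little over 100 million kelvin for 10 keV. The decay function shows why a tritium reserve cannot simply be stockpiled: without fresh production, any stock halves every half-life.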

Along the lines of NIF, there is also a program in France, another country armed with nuclear weapons. CEA, the Commissariat à l'Energie Atomique, through its Direction des Applications Militaires, finances the Laser Mégajoule (LMJ) near Bordeaux, which cost three billion euros and has been operational since October 2014. Investments like these show the level of military interest in fission-free fusion, and so far military-funded programs are the only ones to have achieved self-sustaining reactions.

Private enterprises

In the private field, First Light Fusion, a British company, has already invested tens of millions to carry out inertial fusion by striking a solid fuel target with a projectile the size of a tennis ball. The experimental results consist, for now, of just a handful of neutrons. The amount of heat generated is, so far, undetectable, but the energy of the neutrons, 2.45 MeV, corresponds to the fusion of deuterium, the material of the target. I cite First Light Fusion to show that there is interest in inertial fusion even in private companies, outside the national nuclear weapons laboratories. Marvel Fusion, based in Bavaria, is another private enterprise claiming a new route to inertial confinement ignition.
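Why 2.45 MeV points to deuterium-deuterium fusion can be seen from simple two-body kinematics. The sketch below assumes the reactants are nearly at rest and uses non-relativistic momentum conservation, so the neutron carries the fraction of the released energy given by the mass of the other product over the total product mass. The atomic masses are standard reference values, not numbers from this article.

```python
# Atomic masses in unified atomic mass units (standard reference values).
M_N = 1.00866492     # neutron
M_D = 2.01410178     # deuterium
M_T = 3.01604928     # tritium
M_HE3 = 3.01602932   # helium-3
M_HE4 = 4.00260325   # helium-4

U_TO_MEV = 931.494   # energy equivalent of one atomic mass unit, MeV


def q_value(reactants: tuple, products: tuple) -> float:
    """Energy released by the reaction, in MeV, from the mass difference."""
    return (sum(reactants) - sum(products)) * U_TO_MEV


def neutron_energy(reactants: tuple, recoil_mass: float) -> float:
    """Neutron kinetic energy in MeV for reactants at rest.

    Non-relativistic two-body decay: the lighter product takes the
    larger share of the energy, in inverse proportion to the masses.
    """
    q = q_value(reactants, (recoil_mass, M_N))
    return q * recoil_mass / (recoil_mass + M_N)


if __name__ == "__main__":
    # D + D -> He-3 + n
    print(f"D-D neutron: {neutron_energy((M_D, M_D), M_HE3):.2f} MeV")
    # D + T -> He-4 + n
    print(f"D-T neutron: {neutron_energy((M_D, M_T), M_HE4):.2f} MeV")
```

The deuterium-deuterium branch that emits a neutron gives about 2.45 MeV, while deuterium-tritium fusion gives a neutron of about 14 MeV, so the measured neutron energy identifies which reaction took place.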

Some 12 orders of magnitude separate the fuel density needed in inertial fusion, on the scale of that of solid matter, from that of a TOKAMAK, which is the density of a good laboratory vacuum. For those wondering whether this gap hides alternative methods to carry out nuclear fusion for peaceful and military purposes, the answer is certainly yes. Until now, in academia, before the advent of entrepreneurs' fusion, no proposal seemed attractive enough to be seriously pursued experimentally. The panorama could change in years to come. The proposal of General Fusion, the company backed by Jeff Bezos, is of this type: short pulses at intermediate density. One wonders if the founder of Amazon is aware of sponsoring research with possible military applications.

Experiments

The idea of triggering fusion in a deuterium-tritium target by concentrating laser radiation, or conventional explosives, has long fascinated those who see it as a potentially unlimited source of energy and also those who consider it an effective and devastating weapon. At the Frascati laboratories of CNEN, the Comitato Nazionale per l'Energia Nucleare, now ENEA, Energia Nucleare e Energie Alternative, we find examples of experiments with both methods in the 1970s; see "50 years of research on fusion in Italy" by Paola Batistoni.

According to some sources, the idea of triggering fusion with conventional explosives, as in the Frascati MAFIN and MIRAPI experiments described in the CNEN review report mentioned above, was seriously considered by Russian weapon scientists in the early 1950s and vigorously pursued at the Lawrence Livermore Laboratory during 1958-61, the years of a moratorium on nuclear testing, as part of a program ironically titled "DOVE".

According to Sam Cohen, who worked on the Manhattan Project, DOVE failed in its goal of developing a neutron bomb "for technical reasons, which I am not free to discuss." But Ray Kidder, formerly at Lawrence Livermore, says the US lost interest in the DOVE program when testing resumed because "the fission trigger was a lot easier". It didn't all end there, though. It is instructive to read now an article that appeared in the NYT in 1988, which describes a nuclear experiment carried out to verify the feasibility of an inertial fusion explosion not triggered by fission, like that of Livermore's NIF. In addition to showing the unequivocal military interest in these initiatives, the article gives an idea of the complexity, and slow pace, of their development. Nevertheless, the initiatives of the 1980s seem to be bearing fruit now.

Modern nuclear devices are "boosted": they use fusion to enhance their yield and reduce their cost, but the bulk of the explosive power still originates from the surrounding fissile material, not from fusion. However, there are devices where the energy originates almost exclusively from fusion reactions, such as the mother of all bombs, the Soviet Tsar Bomba. It was a multi-stage H-bomb with a yield of 50 megatons. The addition of a tamper of fissile material would have greatly enhanced its yield, but it was preferred to keep it "clean".

It is important to underline that the H component of a thermonuclear device, unlike fissile explosives, contributes little to long-term environmental radioactivity. Uncovering the secrets of ICF could indicate how to annihilate the enemy while limiting permanent environmental damage. It is the same reason why civilian fusion is claimed to be more attractive than fission: the final products, mostly helium, are much less radioactive than the heavy elements characteristic of fission ashes. As mentioned earlier, radioactivity nonetheless jeopardizes the usefulness of civilian fusion in other ways: a heavy neutron flux reduces the already precarious reliability of the reactor, and radiation protection greatly increases its cost.

Despite the rhetoric of some press advertising, the relevance of ICF for energy production is minimal, for many reasons. First of all, as in the case of NIF, the primary energy, that is, the supply power of all the devices involved, is hundreds of times higher than the thermal energy produced by the reactions; the quasi-breakeven reported refers to the energy of the laser light alone. Even more importantly, the micro-explosion repetition rate and the reliability necessary in a power plant constitute insurmountable obstacles.

Where do we stand?

Back to ICF: the Lawrence Livermore National Lab's NIF experiment is funded by the US nuclear weapons program, aiming at new weapons while complying with the yield limits imposed by the Comprehensive Nuclear-Test-Ban Treaty (CTBT). The article "The Question of Pure Fusion Explosions Under the CTBT" (Science & Global Security, 1998, Volume 7, pp. 129-150) explains why we should be concerned about the pure fusion weapons presently under investigation.

With nuclear fusion, we are witnessing a situation similar to what appeared clear to many of the scientists who participated in the development of weapons at the time of Hiroshima and Nagasaki: nuclear energy is frighteningly dangerous while potentially useful for producing energy and as a war deterrent.

With fusion, the balance between weapons and peaceful uses seems to be even more questionable, making further developments harder to justify. Fusion weapons, which will arrive earlier than reactors, are potentially more devastating than fission weapons, with a range extending to both higher and lower yields. Low-power devices, while remaining very destructive, would not carry a strong deterrent power, and the super-high-power ones, hundreds and thousands of megatons, would have catastrophic consequences on a planetary level. On the other hand, electricity production by fusion now seems less and less likely to work out, and economically less attractive than the already uninviting fission.

The wind and photovoltaic revolution, which is rendering the already proven nuclear fission obsolete despite the urgency of decarbonization, is making fusion unappealing even before it is proven to work. At the same time, possible military applications should discourage even the investigation of fusion tritium technologies. At the very least, new research regulations are needed.

It's a collective choice

Is "science" unstoppable in this instance?

First of all, I would characterize these developments as technological rather than scientific. We are talking about applications without general interest, not a frontier of science. Fusion is a "nuclear chemistry" with potentially aberrant applications, in analogy to other fields which are investigated in strict isolation. Fortunately, fusion is an economically very demanding technology, impossible to develop in a home garage. Working on fusion can be, at least for now, only a collective choice, one that recalls the story of the atomic bomb at the end of the 1930s, but at a more advanced stage of development than when Szilard involved Einstein to reach Roosevelt. Is the genie about to come out of the lamp?

Previously published in Italian on Scenari per il Domani, September 14, 2022