It is said that if you have a monkey pounding at the keys of a typewriter, by mere chance, eventually it will produce all the works of Shakespeare. The Library of Babel, a story by Jorge Luis Borges, is another version of the same idea: a nearly infinite repository of books formed by all the possible combinations of characters. Most of these books are just random combinations of characters that make no sense but, somewhere in the library, there is a book unraveling the mysteries of the universe, the secrets of creation, and providing the true catalog of the library itself. Unfortunately, this book is impossible to find and even if you could find it you would not be able to separate it from the infinite number of books that claim to be it but are not.

The Library of Babel (or a large number of typing monkeys) may be a fitting description of the sad state of "science" as it is nowadays. An immense machine that mostly produces nonsense and, perhaps, some gems of knowledge, unfortunately nearly impossible to find.

Mr. Luddyinski is mostly correct in his description, but he is missing some facets of the story that are even more damning than others. For instance, in his critique of science publishing, he does not mention that the scientists working as editors are paid by the publishers. So, they have an (often undeclared) conflict of interest in supporting a huge organization that siphons public money into the pockets of private organizations.

On the other hand, Luddyinski is too pessimistic about the capability of individual scientists to do something good despite all the odds. In science, there holds the general rule that things done illegally are done most efficiently. So, scientists must obey the rules if they want to be successful but, once they attain a certain degree of success, they can bend the rules a little -- sometimes a lot -- and try to rock the boat by doing something truly innovative. It is mainly in this way that science still manages to progress and produce knowledge. Some fields like astronomy, artificial intelligence, ecosystem science, biophysical economics, and several others are alive and well, only marginally affected by corruption.

Of course, the bureaucrats that govern these things are working hard at eliminating all the possible spaces where creative escapades are possible. Even assuming that they won't be completely successful, there remains the problem that an organization that works only when it ignores its own rules is hugely inefficient. For the time being, the public and the decision-makers haven't yet realized what kind of beast they are feeding, but certain things are percolating outside the ivory tower and are becoming known. Mr. Luddynski's paper is a symptom of the gradual dissemination of this knowledge. Eventually, the public will stop being mesmerized by the word "science" and may want something done to make sure that their tax money is spent on something useful rather than on a prestige competition among rock-star scientists.

(translated by Ugo Bardi)

Foreword

Science is not the scientific method. "Science" - capitalized and in quotation marks - is now an institution, stateless and transnational, that has fraudulently appropriated the scientific method, made it its exclusive monopoly, and uses it to extort money from society - on its own or on behalf of third parties - after having proclaimed itself the new Church of the Certification of Truth [1].

An electrician trying to locate a fault or a cook aiming to improve a recipe are applying the scientific method without even knowing what it is, and it works! It has always worked for thousands of years, that is, before anyone codified its algorithm, and it will continue to do so, despite modern inquisitors.

This writer is not a scholar and, out of ideological conviction, does not cite sources, but he learned at an early age how to spell "epistemology." He then wallowed for years in the sewage of the aforementioned crime syndicate until, without having succeeded in habituating himself to the mephitic stench, he found an honorable way out. Popper, Kuhn, and Feyerabend were all thinkers who fully understood the perversion of certain institutional mechanisms but who could not even have imagined that the rot would run so unchecked and tyrannical through society, to the point where a scientist could achieve the power to prevent you from leaving your house or owning a car, or to force you to eat worms. Except for TK: he had it all figured out, but that is another matter.

This paper will not discuss the role that science plays in contemporary society, its gradual becoming a cult, the gradual eroding of spaces of freedom and civic participation in its name. It will not discuss the relationship with the media and the power they have to pass off the rants of a mediocre bumptious professor as established truths. Thus, there will be no talk about the highest offices of the state declaring war on anti-science, declaring victories at plebiscites that were never called, where people are taken to the polls by blackmail and thrashing.

There will be no mention of how "real science" - that is, science that respects the scientific method - is being raped by the international virologists and scientific and technical committees of the world, who make incoherent decisions, literally at the drop of a hat, and demand that everyone comply without question.

Nor will we discuss the tendency to engage in sterile Internet debates about the article that proves us right in the Lancet or the Brianza Medical Journal or the Manure Magazine. Nor the obvious tendency of the deboonker of the moment to cherry-pick. "Eh, but Professor Giannetti of Fortestano is a known waffler, lol." The office cultists of the ipse dixit are now meticulous enforcers of a strict hierarchy of sources and opinions, based on qualitative prestige rankings as improbable as they are rigid or, worse, on quantitative bibliometric indices as improbable as they are arbitrary.

Here you will be told why science is not what it says it is and precisely why it has assumed such a role in society.

Everything you will find written here is widely known and documented in books, longforms, articles in popular weeklies, and even articles in peer-reviewed journals (LOL). Do a Google search for such terms as "publish or perish," "reproducibility crisis," "p-hacking," "publication bias," and dozens of other related terms. I don't provide any sources because, as I said, it is contrary to my ideology. The added value of what I am going to write is an immediate, certainly partisan and uncensored, description of the obscene mechanisms that pervade the entire scientific world, from Ph.D. student recruitment to publications.

There is also no "real science" to save, as opposed to "lasciviousness." The thesis of this paper is that science is structurally corrupt and that the conflicts of interest that grip it are so deep and pervasive that only a radical reconstruction - after its demolition - of the university institution can save the credibility of a small circle of scholars, those who are trying painstakingly, honestly, and humbly to add pieces to the knowledge of Creation.

The scientist and his career

"Scientist" today means a researcher employed in various capacities at universities, public or private research centers, or think tanks. The scientist's career is entirely different from that of any other worker and is reminiscent of the cursus honorum of the Roman senatorial class. Cursus honorum my ass. A scientist's CV is different from that of any other worker. Incidentally, the hardest thing for a scientist is to compile a CV intelligible to the real world, should he or she want to return to earning an honest living among ordinary mortals. Try doing a Europass after six years of post-doc. LOL.

The scientist is a model student throughout his or her school career first and college career later. Model student means disciplined, with grades in the top percentiles of standardized tests, and also good interpersonal skills. He manages to get noticed during the last years of university (so-called "undergraduate," which, strangely, in Italy is called the magistrale or specialistica or whatever the fuck it is called nowadays), until he finds a recommendation (meant in the bad sense of the term) from a professor to enroll in a Ph.D. program, or doctorate, as it is called outside the Anglosphere. The Ph.D. is an elite university program, three to six years long, depending on the country and discipline, where one is trained to be a "scientist."

During the Ph.D. in the first few years, the would-be scientist takes courses at the highest technical level, taught by the best professors in his department, which are complemented by an initial phase of initiation into research, in the form of becoming a "research assistant," or RA. This phase, which marks the transition from "established knowledge" of textbooks to the "frontier of scientific research," takes place explicitly under the guidance of a professor-mentor, who will most likely follow the doctoral student through the remainder of the doctoral course, and almost always throughout his or her career. The education of crap. Not all doctoral students will, of course, be paired with a "winning" mentor, but only those who are more determined, ambitious, and show vibrancy in their courses.

During this RA phase, the student is weaned into what are the real practices of scientific research. It is a really crucial phase; it is the time when the student goes from the Romantic-Promethean-Faustian ideals of discovering the Truth, to nights spent fiddling with data that make no sense, amid errors in the code and general bewilderment at the loss of meaning. Why am I doing all this? Why do I have to find just THAT result? Doesn't this go against all the rationalist schemata I was sharing on Facebook two years ago when I was pissing off the flat-earthers of the moment? What do you mean, if the estimate does not support the hypothesis, then I have to change the specification of the model? It is at this point that the young scientist usually asks the mentor some timid questions with a vague "epistemological" flavor, which are usually evaded with positivist browbeating about science proceeding by trial and error, or with a speech that sounds something like this: "You're going to get it wrong. There are two ways of not understanding: not understanding because you are trying to understand, or not understanding by pissing off others. Take your pick. In October, your contract runs out."

The purest, at this point, fall into depression, from which they will emerge by finding an honest job, with or without a piece of paper. The careerists, psychopaths, and naive fachidioten, on the other hand, will pick up speed and run along the tracks like bullet trains, unstoppable in their blazing careers devoted to prestige.

After the first phase of RA, during which the novice contributes to the mentor's publications - with or without mention among the authors - he or she moves on to the next, more mature phase of building his or her own research pipeline, collaborating as a co-author with the mentor and his other co-authors, attending conferences, weaving a relational network with other universities. The culmination of this phase is the attainment of the Ph.D. degree, which, however, at this point, is nothing more than a formality, since the doctoral student's achievements speak for themselves. The "dissertation," or "defense," in fact, will be nothing more than a seminar where the student presents his or her major work, which is already publishable.

But now the student is already launched on a meteoric path: depending on discipline and luck, he will have already signed a contract as an "assistant professor" in a "tenure track," or as a "post-doc." His future career will thus be determined solely by his ability to choose the right horses, that is, the research projects to bet on. Indeed, he has entered the hellish world of "publish or perish," in which his probability of being confirmed "for life" depends solely on the number of articles he manages to publish in "good" peer-reviewed journals. In many hard sciences, the limbo of post-docs, during which the scientist moves from contract to contract like a wandering monk, unable to experience stable relationships, can last as long as ten years. The "tenure track," or the period after which they can decide whether or not to confirm you, lasts more or less six years. A researcher who manages to become an employee in a permanent position at a university before the age of 35-38 can consider himself lucky. And this is by no means a peculiarity of the Italian system.

After the coveted "permanent position" as a professor, can the researcher perhaps relax and get down to work only on sensible, quality projects that require time and patience and may not even lead anywhere? Can he work on projects that may even challenge the famous consensus? Does he have time to study so that he can regain some insight? Although the institution of "tenure," i.e., the permanent faculty position, was designed to do just that, the answer is no. Some people will even do that, aware that they will end up marginalized and ignored by their peers, in the department and outside. Such a professor is not fired, but ends up in the last room in the back by the toilet, sees not a penny of research funding, is not invited to conferences, and is saddled with the lamest Ph.D. students to do research. If he is liked, he is treated as an eccentric uncle and good-naturedly teased; if disliked, he is first hated and then ignored.

But in general, if you've survived ten years of publish or perish -- and not everyone can -- it's because you like doing it. So you will keep doing it, partly because the rewards are coveted. Salary increases, committee chairs, journal editor positions, research funds, consultancies, invitations to conferences, million-dollar grants as principal investigator, interviews in newspapers and on television. Literally, money and prestige. The race for publications does not end; in fact, at this point, it becomes more and more ruthless. And the scientist, now a de facto manager of research, with dozens of Ph.D. students and postdocs available as workforce, will increasingly lose touch with the subject of research to become interested in academic politics, money, and public relations. It will be the doctoral student now who will "get the code wrong" for him until he finds the desired result. He won't even have to ask explicitly, in most cases. Nobody will notice anyway, because nobody gives a damn. But what about peer review?

Peer review

Thousands of pages have been written about this institution, its merits, its flaws, and how to improve it. It has been ridiculed and trolled to death, but the conclusion recited by the standard bearers of science is always the same: it is the best system we have, and we should keep it. Which is partly true, but its supposed aura of infallibility is the main cause of the sorry state the academy is in. Let's see how it works.

Our researcher Anon has his nice pdf written in LaTeX titled "The Cardinal Problem in Manzoni: is it Betrothed or Behoofed?" (boomer quote) and submits it to the Journal of Liquid Bullshit via its web platform. The JoLB chief editor takes the pdf, takes a quick look, and decides which associate editor to send it to, depending on the topic. The chosen editor takes another quick look and decides whether to 1) send a short letter telling Anon that his work is definitely nice and interesting but, unfortunately, it is not a good fit for the Journal of Liquid Bullshit, and recommending similar outlets such as the Journal of Bovine Diarrhea or the Liquid Bovine Stool Review, or 2) send it to the referees. But let's pause for a moment.

What is the Journal of Liquid Bullshit?

The Journal of Liquid Bullshit is a "field journal" within the general discipline of "bullshit" (Medicine, Physics, Statistics, Political Science, Economics, Psychology, etc.), dealing with the more specialized field of Liquid Bullshit (Virology, Astrophysics, Machine Learning, Regime Change, Labor Economics, etc.). It is not a glorious journal like Science or Nature, and it is not a prestigious journal in the discipline (Lancet, JASA, AER, etc.). But it is a good journal in which important names in the field of Liquid Bullshit nonetheless publish regularly. In contrast to the more prestigious ones, which are generally linked to specific bodies or university publishers, most of these journals are published (online, that is) by for-profit publishers, who make a living by selling subscription packages to universities at steep prices.

Who is the chief editor? The editor is a prominent personality in the Liquid Bullshit field. Definitely, a Full Professor, minimum of 55 years old, dozens of prominent publications, highly cited, has been keynote speaker at several annual conferences of the American Association of Liquid Bovine Stools. During conferences, he constantly has a huddle of people around him.

Who are the Associate editors?

They are associate or full professors with numerous publications, albeit relatively young. Well connected, well launched. The associate editor's job is to manage the manuscript at all stages, from choosing referees to decisions about revisions. The AE is the person who has all the power over the paper, not only of course for the final decision, but also for the choice of referees.

Who are the referees?

Referees are academic researchers formally contacted by the AE to evaluate the paper and write a short report. Separately, they also send a private recommendation to the AE on the fate of the paper: acceptance, revision, or rejection. The work of the referees is unpaid; it is time taken away from research or very often from free time. The referee receives only the paper; he or she has no way to evaluate the data or code, unless (which is very rare) the authors make them available on their personal web pages before publication. In any case, a referee can hardly afford to waste time fiddling around. The evaluation required of the referee is only of a methodological-qualitative nature, and not of merit. The "merit" evaluation would be up to the "scientific community," which, by replicating the authors' work, under the same or other experimental conditions, would judge its validity. Even a child would understand that there is a conflict of interest as big as a house. Indeed, the referee can actively sabotage the paper, and the author can quietly sabotage the referees when it is their turn to judge.

In fact, when papers were typed and shipped by mail, the system used was "double-blind" review. The title page was removed from the manuscript: the referee did not know whom he was judging, and the author did not know who the referee was. Since the Internet has existed, however, authors have found it more and more convenient to publicize their work in the "working paper" format. There are many reasons for doing so, and I won't go into them here, but it is now so widespread that referees need only do a very brief Google search to find the working paper and, with it, the authors' names. By now, more and more journals have given up pretending to believe in double-blind reviewing, and they send the referees the pdf complete with the title page. Thus referees no longer judge blind, which matters all the more because they have strong conflicts of interest. For example, the referee may get hold of the paper of a friend or colleague of his or hers, or of a stranger whose results support - or contradict - his or her research. A referee may also "reveal" himself years later to the author, e.g. over a drink, during alcoholic events at a conference, obviously in case of a favorable report. "You know, I was a referee of your paper at Journal X." It can come in handy if there is some confidence. Let's not forget that referees are people who are themselves trying to get their papers published in other journals, or even the same ones. And not all referees are the same. A "good" referee is a rare commodity for an editor. The good referee is the one who responds on time and writes predictable reports. I have known established professors who boasted that they receive dozens of papers a month to referee "because they know I reject them all right away."

So how does this peer review process work?

We should not imagine editors as people removed from the real world who merely have their hands extremely full. An editor, in fact, has enormous power, and one does not become an editor unless he or she shows that he or she covets this power, as well as having earned it, according to the perverse logic of the cartel. The editor's enormous power lies in influencing the fate of a paper at all stages of revision. He can choose referees favorable or unfavorable to a paper: scientific feuds and their participants are known, and are especially known to editors. He can also side with the minority referee and ask for a revision (or a rejection).

Every top researcher knows which editors are friendly, and knows which potential referees can be the most dangerous enemies. Articles are often calibrated, trying to suck up to the editor or potential referees by winking at their research agendas. Indeed, it is in this context that the citation cattle market develops: if I am afraid of referee Titius, I will cite as many of his works as possible. Another strategy (valid for smaller papers and niche works) is to ignore him completely, otherwise the neutral editor might choose him as referee simply by scrolling through the bibliography. Many referees also go out of their way at the review stage to pump up their citations, essentially pushing authors to cite their work, even if it is irrelevant. But this happens in smaller journals. [2]

The most relevant thing to understand about the nature of peer review is how it is a process in which individuals who are consciously and structurally conflicted participate. The funny thing is that this same system is also used to allocate public research funds, as we shall see.

The fact that the occasional "inconvenient" article manages to break through the peer review wall should not, of course, deceive: the process, while well-guided, still remains partly random. A referee may recommend acceptance unexpectedly, and the editor can do little about it. In any case, little harm: an awkward article now and then will never contribute to the creation of consensus, the one that suits the ultimate funding apparatus, and indeed gives the outside observer the illusion that there is frank debate.

But so can one really rig research results and get away with it?

Generally yes, partly because you can cheat at various levels, generally leaving behind various screens of plausible deniability, i.e., gimmicks that allow you to say that you were wrong, that you didn't know, that you didn't do it on purpose, that it was the Ph.D. student, that the dog ate your raw data. Of course, in the rare case that you get caught you don't look good, and the paper will generally end up "retracted." A fair mark of infamy on a researcher's career, but if he can hide the evidence of his bad faith he will still retain his professorship, and in a few years everyone will have forgotten. After all, these are things that everyone does: sin, stones, etc.

Every now and then, and more and more frequently, some important paper is retracted when it turns out that the data of a well-published work were completely made up, and that the results were too good to be true. This happens when some young up-and-coming psychopath exaggerates, and draws too much attention to himself. These extreme cases of outright fraud are certainly more numerous than those discovered, but as mentioned, you don't need to invent the data to come up with a publishable result, just pay a monkey to try all possible models on all possible subsets. The a posteriori justification of why you excluded subset B can always be found. You can even omit it if you want to, because if you haven't stepped on anyone's toes, there is no way that anyone is going to start nitpicking your work. Even in the experimental sciences it can happen that no one has been able to replicate the experiments of very important papers, and that it takes fifteen years to discover that maybe the authors had made it all up. Go google "Alzheimer scandal" LOL! There is no real incentive in the academy to uncover bogus papers, other than literally bragging about them on Twitter.
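To see why "try all possible models on all possible subsets" works so well, here is a minimal simulation sketch. Everything in it is an assumption made for illustration: the sample size, the ten arbitrary yes/no traits used to carve out subgroups, and the normal approximation used for the p-value. The data are pure noise, so any "significant" result is by construction a false positive.

```python
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()

def mean_and_var(xs):
    """Sample mean and unbiased sample variance."""
    m = sum(xs) / len(xs)
    return m, sum((x - m) ** 2 for x in xs) / (len(xs) - 1)

def p_value(a, b):
    """Two-sided p-value for a difference in means (normal approximation)."""
    mean_a, var_a = mean_and_var(a)
    mean_b, var_b = mean_and_var(b)
    z = (mean_a - mean_b) / (var_a / len(a) + var_b / len(b)) ** 0.5
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))

def one_study(rng, n=100, n_traits=10):
    """Simulate one study with NO true effect; return (honest p, best p found)."""
    treated = [i % 2 == 0 for i in range(n)]
    outcome = [rng.gauss(0, 1) for _ in range(n)]        # pure noise
    traits = [[rng.random() < 0.5 for _ in range(n)]     # arbitrary yes/no traits
              for _ in range(n_traits)]

    def test(keep):
        a = [y for y, t, k in zip(outcome, treated, keep) if k and t]
        b = [y for y, t, k in zip(outcome, treated, keep) if k and not t]
        if len(a) < 2 or len(b) < 2:                     # subgroup too small to test
            return 1.0
        return p_value(a, b)

    honest = test([True] * n)            # the single full-sample test
    best = honest
    for trait in traits:                 # the "monkey": try every subgroup
        best = min(best, test(trait), test([not x for x in trait]))
    return honest, best

def false_positive_rates(n_studies=500, alpha=0.05, seed=0):
    """Share of null studies declared 'significant', without and with the search."""
    rng = random.Random(seed)
    results = [one_study(rng) for _ in range(n_studies)]
    honest_rate = sum(h < alpha for h, _ in results) / n_studies
    searched_rate = sum(b < alpha for _, b in results) / n_studies
    return honest_rate, searched_rate

if __name__ == "__main__":
    honest, searched = false_positive_rates()
    print(f"honest test: {honest:.2f}   after subgroup search: {searched:.2f}")
```

The honest rate should land roughly at the nominal 5%, while the searched rate comes out several times higher, and no data were invented along the way. Only the choice of which subgroup to report was left free.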

Research funding

Doing research requires money. There is not only laboratory equipment, which is not needed in many disciplines anyway. There is also, and more importantly, the workforce. Laboratories and research in general are run by underpaid people, namely Ph.D. students and post-docs (known in Italy as "assegnisti"). But not only that, there are also expenses for software, dataset purchase, travel to conferences, seminars, and workshops to be organized, with people to be invited and to whom you have to pay travel, hotel, and dinner at a decent restaurant. Consider the important PR side of this: inviting an important professor, perhaps an editor, for a two-day vacation and making a "friend" of him or her can always come in handy.

In addition to all this, there is the fact that universities generally keep a share of the grants won by each professor, and with this share, they do various things. If the professor who does not win grants needs to change his chair, or his computer, or wants to go present at a loser conference where he is not invited, he will have to go hat in hand to the department head to ask for the money, which will largely come from the grants won by others. I don't know how it works in Italy, but in the rest of the world, it certainly works this way. This obviously means that a professor who wins grants will have more power and prestige in the department than one who does not win them.

In short, winning grants is a key goal for any ambitious researcher. Not winning grants, even for a professor with tenure, implies becoming something of an outcast. The one who does not win grants is the odd one out, with shaggy hair, who has the office down the hall by the toilet. But how do you win grants?

Grants are given by special agencies -- public or private -- for the disbursement of research funds, which publish calls, periodic or special. The professor, or research group, writes a research project in which he or she says what he or she wants to do, why it is important, how he or she intends to do it, with what resources, and how much money he or she needs. The committee, at this point, appoints "anonymous" referees who are experts in the field and who peer review the project. It doesn't take long to realize that if you are an expert in the field, you know very well whom you are going to evaluate. If you have read this far, you will also know full well that referees have a gigantic conflict of interest. In fact, all it takes is one supercilious remark to scuttle the rival gang's project, or to favor the friend with whom you share the same research agenda, while no one will have the courage to scuttle the project of the true "raìs" of the field. Finally, the committee will evaluate the applicant's academic profile, obviously counting the number and prestige of publications, as well as the totemic H-index.
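For readers who have never met the totem: the h-index is the largest number h such that the researcher has h papers with at least h citations each. A minimal sketch follows. The citation counts are hypothetical numbers chosen only to show how few well-placed citations it takes to move the index.

```python
def h_index(citations):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h

# Hypothetical profile: five papers with these citation counts.
before = [10, 8, 5, 4, 3]
print(h_index(before))   # → 4

# Three extra citations, steered to the two weakest papers, are enough.
after = [10, 8, 5, 5, 5]
print(h_index(after))    # → 5
```

Because only the papers sitting near the threshold matter, a handful of citations extracted at the review stage moves the index more than any amount of citations to an already famous paper.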

So we have a system where those who get grants publish, and those who publish get grants. All is governed by an inextricable web of conflicts of interest, where it is the informal, and ultimately self-interested, connections of the individual researcher that win out. Informal connections that, let us remember, start with the Ph.D. What is presented as an aseptic, objective, and impartial system of meritocratic evaluation resembles at best the system for awarding the contract to resurface the bus parking lot of a small town in the Pontine countryside in the 1980s.

The research agenda

We have mentioned it several times, but what really is a research agenda? In short, we can say that the research agenda is a line of research in a certain sub-field of a sub-discipline, linked to a starting hypothesis and/or a particular methodology. This starting hypothesis will always tend to be confirmed by those pursuing the agenda. The methodology, on the other hand, will always be presented as decisive and far superior to the alternatives. A research agenda, to continue with the example from before, could be the relationship between the color and specific gravity of cattle excrement and milk production. Or non-parametric methods to estimate excreta production given diet composition.

Careers are built or blocked around the research agenda: a researcher with a "hot" agenda, perhaps in a relatively new field, will be much more likely to publish well, and thus obtain grants, and thus publish well. You are usually launched on the hot agenda during your doctoral program, if the advisor advises you well. For example, it may be that the advisor has a strand in mind, but he doesn't feel like setting out to learn a new methodology at age 50, so he sends the young co-author ahead. Often, then, the 50-year-old prof, now a "full professor," finds himself becoming among the leaders of a "cutting-edge" research strand without ever really having mastered its methodologies and technicalities, thus limiting himself to the managerial and marketing side of the issue.

As already explained, real gangs form around the agendas, acting to monopolize the debate on the topic, giving rise to a real controlled pseudo-debate. The bosses' "seminal" articles can never be totally demolished; if anything, they can be enriched, and expanded. One will be able to explore the issues from another point of view, under other dimensions, using new analytical techniques, different data, which will lead to increasingly different conclusions. The key thing is that no one will ever say "sorry guys but we are wasting time here." The only time wasted in the academy is time that does not lead to publications and, therefore, grants.

But then who dictates the agenda? Directly, "they" dictate it: the big players in research, that is, the professors at the top of their careers internationally, editors of the most prestigious journals. Indirectly, it is dictated by the ultimate funders of research, multinational corporations and governments, through the actions of lobbies and various international potentates.

The big misconception underlying science, and thus the justification of its funding in the face of public opinion, is that this incessant and chaotic "rush to publish" nonetheless succeeds in adding building blocks to knowledge. This is largely false.

In fact, research agendas never talk to each other, and above all, they never seem to come to a conclusion. After years of pseudo-debate the agenda will have been so "enriched" by hundreds of published articles that trying to make sense of it would be hard and thankless work. Thankless because no one is interested in doing this work. Funds have been spent, and chairs have been taken. In fact, the strand sooner or later dries up: the topic stops being fashionable, and thus desirable to top journals, it gradually moves on to smaller journals, new Ph.D. students stop caring about it, and if the leaders of the strand care about their careers, they move on to something else. And the merry-go-round begins again.

The fundamental questions posed at the beginning of the agenda will not have been satisfactorily answered, and the conceptual and methodological issues raised during the pseudo-academic debate will certainly not have been resolved. An outside observer who studied the entire literature of a research strand could not help but notice that there are very few "take-home" results. Between inconclusive results and flawed or misapplied methodologies, the net contribution brought to knowledge is almost always zero, and the qualitative, or "common sense," answer always remains the best.

Conclusions

We have thus far described science: its functioning, the actors involved in it, and their recruitment. We have described how conflicts of interest - and moral and substantive corruption - govern every aspect of the academic profession, which is thus unable, as a whole, to offer an objective, unbiased viewpoint on anything.

The product of science is a giant pile of crap: wrong, incomplete, blatantly false and/or irrelevant answers. Answers to questions that in most cases no one in their right mind would want to ask. A mountain of shit where no one among those who wallow in it knows shit. No one understands shit, and poorly posed questions are given bought-and-steered answers in return. A mountain of shit within which feverish hands seek -- and find -- the mystical-religious justification for contemporary power, arbitrariness and tyranny.

Sure, there are exceptions. Sure, there is the prof. who has slipped through the net of the system and now, thanks to the position he has gained, has a platform to say something interesting. But he is an isolated voice, ridiculed, used to stir up the usual two minutes of hate on TV or social media. There is no baby to be saved from the dirty water. The child is dead. Drowned in the sewage.

The "academic debate" is now a totally self-referential process, leading to no tangible quantitative (or qualitative) results. All academic research is nothing but a giant Ponzi scheme to increase citations, which serve to get grants and pump up the egos and bank accounts -- but mostly egos -- of professional careerists.

Science as an institution is an elephantine, hypertrophied apparatus, corrupt to the core, whose only function - besides incestuously reproducing itself - is to provide legitimacy for power. Surely at one time it was also capable of providing the technical knowledge base necessary for the reproduction and maintenance of power itself. Today it no longer is: the unstoppable production of the shit mountain makes this impossible. At most, it manages to select the scions of the new ruling class, nominally, by simply handing out an attendance token through the most elite schools.

When someone extols the latest scientific breakthrough to you, the only possible response you can give is, "I'm not buying anything, thank you." If someone tells you that they are acting "according to science," run away.

[1] It was Bellarmine who advised Galilei to speak of a "hypothesis." In response, Galilei published the ridiculous "dialogue," where the evidence brought to support his claims about heliocentrism was nothing more than conjecture, completely wrong, and without any empirical basis. The Holy Office's position was literally, "say whatever you like as long as you don't pass it off as Truth." Galilei got his revenge: now they say whatever they like and pass it off as Truth. Sources? Look them up.

[2] Paradoxically, in the lower-middle tier of journals, where cutthroat competition is minimal, one can still find a few rare examples of peer review done well. For example, I was once asked to referee a paper for a smaller journal with which I had never had anything to do and whose editor I did not know even indirectly. The paper was sent without a title page, and was therefore anonymous, and strangely I could not find the working paper online. It was objectively rubbish, and I recommended rejection.

________________________________________________________________

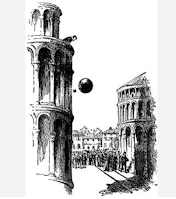

Galileo's paradigmatic idea was an experiment about the speed of falling objects. It is said that he took two solid metal balls of different weights and dropped them from the top of the Tower of Pisa. He then noted that they arrived at the ground at about the same time. That allowed him to lampoon an ancient authority such as Aristotle for having said that heavier objects fall faster than lighter ones (*). There followed an avalanche of insults to Aristotle that continues to this day. Even Bertrand Russell fell into the trap of poking fun at Aristotle, accused of having said that women have fewer teeth than men. Too bad that he never said anything like that.
